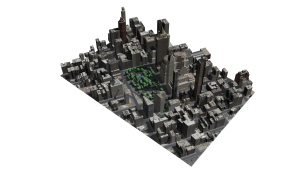

Above: A portion of New York’s Central Park and surrounding neighborhoods, as automatically parsed into GIS data and reconstructed into 3D models by Geopipe.

A new company called Geopipe builds 3D models using machine learning and AI, but with no photogrammetrists?

A couple of weeks ago I found myself on a video call with a guy named Christopher Mitchell (recently out of New York University after earning a PhD in computer science), and I couldn’t quite believe what I was being told: “We automatically extract buildings and other data from imagery and other sensor data,” Mitchell said.

“No photogrammetry?” I asked.

“No, it is a fully automated process. We can extract data for you in various LODs [levels of detail] in vector format,” Mitchell replied.

At this point my mind was racing. Did I really hear that they could provide what I consider to be the holy grail? Furthermore, I was asking about data from the UK, nowhere near their base in New York, and that didn’t seem to be a problem for them; if I wanted more detail, I would just need to take some ground-level photos and send them over.

Gaming Roots

Geopipe was founded in 2016 by Mitchell, a lifelong train fan, and Thomas Dickerson, whom you can always spot wearing a Star Wars shirt. As friends and colleagues for more than 15 years, they came to the 3D data industry from computer science, not from a geospatial background.

In 2013, while both were working on their PhDs, each became fascinated by the idea of putting the real world into video games. Mitchell wanted to turn New York City into a perfect 1:1 Minecraft world in which he could build his own skyscrapers, and Dickerson wanted to teach computers to automatically generate virtual LEGO Architecture sets.

Together, they found that architects, engineers, game developers, and simulation creators were painstakingly hand-building models or hand-collecting data about the world, an approach that isn’t cost-effective for GIS or AEC pros. It didn’t hurt that the 3D world market was worth $7bn at the time the company was formed, rising to $17bn by 2020 (IBISWorld & VFX).

Geopipe’s digital twin of the Flatiron district of New York.

Their Goal

From Geopipe’s inception, Mitchell and Dickerson applied their computer science knowledge toward an industry milestone: using machine learning and AI algorithms to build a “pipeline” that extracts consistent and accurate information about terrain, buildings, trees, and other objects in the world, and then turns that information into accurate, semantically labeled 3D models.

Their pipeline can generate cities in a fraction of the time a large team of photogrammetrists would need for the same task. For example, Geopipe asserts that it can generate a complete 1,100 km² model of New York City in less than a day, and that a customer can download a model of any size in 30 seconds: bold claims indeed, compared to the hundreds of hours or months a hand-built model requires.
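To put the stated throughput in perspective, here is the back-of-the-envelope arithmetic implied by the claim of 1,100 km² in under a day. It uses only the two figures quoted above, treats 24 hours as an upper bound, and says nothing about how Geopipe actually schedules or parallelizes its processing.

```python
# Minimum throughput implied by "1,100 km² in less than a day".
# Only the article's two figures are used; 24 h is treated as an upper bound.

AREA_KM2 = 1100.0  # claimed area of the New York City model
HOURS = 24.0       # "less than a day"


def min_throughput(area_km2: float, hours: float) -> tuple[float, float]:
    """Return the minimum rate (km² per hour, km² per minute) needed
    to cover `area_km2` within `hours`."""
    per_hour = area_km2 / hours
    per_minute = per_hour / 60.0
    return per_hour, per_minute


per_hour, per_minute = min_throughput(AREA_KM2, HOURS)
print(f"{per_hour:.1f} km²/h, {per_minute:.2f} km²/min")  # 45.8 km²/h, 0.76 km²/min
```

In other words, the claim works out to at least one square kilometre roughly every 80 seconds, around the clock.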

Given Geopipe’s roots in gaming, the goal was to model what’s actually in the world as accurately as possible. Players need to be able to interact with doors that open, windows made of glass, and trees that actually lose their leaves in winter. The photogrammetric approach therefore wasn’t good enough: green shrink-wrapped blobs for trees and shadows baked into the photo textures on building sides didn’t help game developers and wouldn’t provide the data that GIS and AEC professionals need. Hand-modeling, meanwhile, wasn’t nearly fast or cost-effective enough.

Therefore, Geopipe creates models with information about what’s actually in the world and what everything is made of. That accuracy extends to measurements and locations: every Geopipe model is geolocated to where it should be placed in the real world, and most surfaces in Geopipe’s models are within 15 cm (6 inches) of their correct location.
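One way a customer might spot-check a figure like “within 15 cm” is to compare points on the model against independently surveyed check points and report the share that fall inside the tolerance. The sketch below assumes the pairs are already matched and expressed in the same projected coordinate system in metres; the coordinates are made-up illustrative values, and this is not Geopipe’s own validation procedure.

```python
import math

TOLERANCE_M = 0.15  # the article's "within 15 cm" figure


def share_within_tolerance(model_pts, survey_pts, tol=TOLERANCE_M):
    """Fraction of paired points whose 3D Euclidean offset is <= tol.

    model_pts, survey_pts: equal-length lists of (x, y, z) tuples in metres,
    in the same projected CRS. Matching model points to survey points is
    assumed to have been done already.
    """
    if len(model_pts) != len(survey_pts) or not model_pts:
        raise ValueError("need two non-empty, equal-length point lists")
    hits = sum(1 for m, s in zip(model_pts, survey_pts) if math.dist(m, s) <= tol)
    return hits / len(model_pts)


# Hypothetical check points (illustrative values, not real survey data).
model = [(0.00, 0.00, 10.00), (5.00, 2.00, 12.10), (9.00, 4.00, 8.00)]
survey = [(0.05, 0.02, 10.03), (5.00, 2.00, 12.00), (9.30, 4.00, 8.00)]
print(share_within_tolerance(model, survey))  # 2 of the 3 points are within 15 cm
```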

A screenshot from ContextSnap, inspecting semantic data in Geopipe’s New York City before downloading a 3D model.

The Pipeline

Under the hood, Geopipe uses raw data in the form of imagery, lidar, and maps, collected or purchased from a variety of sources.

They run the geographic data through their algorithmic pipeline (“geographic pipeline” becomes “Geopipe”), first identifying what’s actually in the world. They use machine learning and computer vision techniques to find buildings, trees, roads, sidewalks, water, and more, then determine the individual properties of each object.

For example, the pipeline can find the exact contour of the terrain; for every tree, its height, crown diameter, and an estimated species; and for every building, its façade material, roof type, number of floors, and the locations of its doors and windows.
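The kind of per-object record this describes can be sketched as a few small data classes. The field names, units, and example values below are hypothetical choices for illustration only; the article does not describe Geopipe’s actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class Tree:
    """Hypothetical semantic record for one detected tree."""
    lon: float
    lat: float
    ground_elev_m: float     # terrain height under the trunk
    height_m: float
    crown_diameter_m: float
    species_guess: str       # an estimate, not a confirmed identification


@dataclass
class Opening:
    """Hypothetical door or window, positioned along its façade."""
    kind: str                # "door" or "window"
    offset_m: float          # horizontal distance from the façade's start
    sill_m: float            # height of the opening's base above ground
    width_m: float
    height_m: float


@dataclass
class Building:
    """Hypothetical semantic record for one detected building."""
    footprint: list[tuple[float, float]]  # (lon, lat) vertices of the outline
    facade_material: str
    roof_type: str
    floors: int
    openings: list[Opening] = field(default_factory=list)

    def count(self, kind: str) -> int:
        """Number of openings of a given kind, e.g. "window"."""
        return sum(1 for o in self.openings if o.kind == kind)


tree = Tree(-73.97, 40.78, 30.0, 12.5, 8.0, "London plane")
building = Building(
    footprint=[(-73.99, 40.74), (-73.98, 40.74), (-73.98, 40.75), (-73.99, 40.75)],
    facade_material="brick",
    roof_type="flat",
    floors=6,
    openings=[Opening("door", 2.0, 0.0, 1.2, 2.4),
              Opening("window", 4.0, 3.5, 1.0, 1.6)],
)
print(building.count("window"))  # 1
```

Keeping each object as structured, labeled data rather than as raw mesh is what lets the same record drive both the vector deliverables and the 3D models described next.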

Some customers need this vector data directly, but most prefer 3D models. The Geopipe pipeline goes one step further: it models the city described by the extracted vector data as constructive solid geometry (CSG), textures and colors every surface and object realistically, and then exports mesh models.
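Constructive solid geometry describes a solid as Boolean combinations (union, intersection, difference) of simple primitives. The toy sketch below shows the idea with point-membership tests on axis-aligned boxes: a building mass plus a rooftop bulkhead, minus a window recess. The primitives and dimensions are hypothetical, and classifying points rather than producing a mesh is a simplification; this is an illustration of CSG in general, not of Geopipe’s modeler.

```python
from typing import Callable

# A solid is represented implicitly as a predicate: point -> inside?
Point = tuple[float, float, float]
Solid = Callable[[Point], bool]


def box(x0, y0, z0, x1, y1, z1) -> Solid:
    """Axis-aligned box primitive, boundary included."""
    def inside(p: Point) -> bool:
        x, y, z = p
        return x0 <= x <= x1 and y0 <= y <= y1 and z0 <= z <= z1
    return inside


def union(a: Solid, b: Solid) -> Solid:
    return lambda p: a(p) or b(p)


def intersection(a: Solid, b: Solid) -> Solid:
    return lambda p: a(p) and b(p)


def difference(a: Solid, b: Solid) -> Solid:
    return lambda p: a(p) and not b(p)


# Hypothetical building: a 20 x 10 x 30 m mass plus a rooftop bulkhead,
# minus a window recess cut 0.3 m into the y = 0 façade.
mass = box(0, 0, 0, 20, 10, 30)
bulkhead = box(8, 3, 30, 12, 7, 34)
window = box(4, -1, 5, 6, 0.3, 7)
building = difference(union(mass, bulkhead), window)

print(building((10, 5, 15)))  # True: inside the main mass
print(building((5, 0.1, 6)))  # False: inside the window recess
print(building((10, 5, 32)))  # True: inside the bulkhead
```

CSG tools generally evaluate such a tree into a boundary mesh before export, which is the form the article says Geopipe ultimately delivers.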

For portability, Geopipe generates COLLADA (DAE) models, which customers can then download as DAE, FBX, OBJ, glTF, GLB, or even OSG (OpenSceneGraph) models. Geospatial formats aren’t offered yet, but Mitchell said that they’re on the near-term roadmap.
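These mesh formats differ in detail but share the same core: a list of vertices plus faces that index into it. As a hedged illustration of that common structure, the sketch below writes a cube as Wavefront OBJ text using only the standard library. It is not Geopipe’s exporter; a production conversion would also carry normals, texture coordinates, and materials, and would more likely rely on an established library.

```python
def cube_mesh(size: float = 1.0):
    """Return (vertices, faces) for an axis-aligned cube at the origin.
    Faces are quads of 0-based vertex indices, wound counter-clockwise
    when viewed from outside the cube."""
    s = size
    vertices = [
        (0, 0, 0), (s, 0, 0), (s, s, 0), (0, s, 0),  # bottom, z = 0
        (0, 0, s), (s, 0, s), (s, s, s), (0, s, s),  # top, z = s
    ]
    faces = [
        (0, 3, 2, 1),  # bottom (-z)
        (4, 5, 6, 7),  # top (+z)
        (0, 1, 5, 4),  # front, y = 0 (-y)
        (1, 2, 6, 5),  # right, x = s (+x)
        (2, 3, 7, 6),  # back, y = s (+y)
        (3, 0, 4, 7),  # left, x = 0 (-x)
    ]
    return vertices, faces


def to_obj(vertices, faces) -> str:
    """Serialize a polygon mesh as Wavefront OBJ text.
    OBJ face indices are 1-based, so each index is shifted by one."""
    lines = ["# minimal OBJ written by an illustrative sketch"]
    lines += [f"v {x:g} {y:g} {z:g}" for x, y, z in vertices]
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"


obj_text = to_obj(*cube_mesh(2.0))
print(obj_text)
```

Saving `obj_text` to a file with an `.obj` extension should let common mesh viewers open it as a 2 m cube.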

Geopipe’s API and Unity SDK for immersive city visualization in action: above, looking uptown in Manhattan at midday.

Products and Uses

Geopipe is now three years into developing its technology and products. It has released two products powered by the Geopipe pipeline: Geopipe ContextSnap, which lets customers download 3D models at different levels of detail to use directly in their own applications, and the Geopipe SDK/API, which streams Geopipe’s cities into Unity and other software for immersive visualizations, VR, and games.

ContextSnap was developed after Mitchell and Dickerson spoke to hundreds of creative professionals and heard that all of them wanted to keep using the software and workflow with which they were already familiar.

ContextSnap is built around the open-source CesiumJS library. Originally designed simply as a 2D map for selecting an area to download as a 3D model, it now contains 3D previews of Geopipe’s cities. You can click any structure to see what Geopipe knows about it, then select a block, a neighborhood, or an even larger area to download.
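Selecting a download area on a map ultimately yields a geographic extent. As a rough, hedged illustration of sizing such a selection, the sketch below computes the area of a latitude/longitude rectangle on a sphere of radius 6,371 km, using A = R²·Δλ·(sin φ₂ − sin φ₁) with Δλ in radians. The spherical model is an approximation (GIS software would normally use an ellipsoid), the coordinates are arbitrary example values, and nothing here reflects how ContextSnap itself represents a selection.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation


def bbox_area_km2(west, south, east, north, r=EARTH_RADIUS_KM):
    """Area of a lon/lat rectangle given in degrees, on a sphere:
    A = r^2 * (lambda2 - lambda1) * (sin(phi2) - sin(phi1)).
    Assumes west < east (no antimeridian crossing) and south < north."""
    d_lon = math.radians(east - west)
    return r * r * d_lon * (math.sin(math.radians(north))
                            - math.sin(math.radians(south)))


# Arbitrary example box in Manhattan, 0.01 degrees on each side.
print(round(bbox_area_km2(-74.00, 40.74, -73.99, 40.75), 3))
```

A 0.01° box at New York’s latitude comes out a little under 1 km², because meridians converge away from the equator.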

The first customers Geopipe worked with were in the architecture space, so models can be downloaded as streamlined white massings, as fully textured, detailed models with every window filled in as a pane of glass in a frame, or as anything in between.

Recently, Geopipe started supporting AEC customers who want to create interactive visualizations on 2D screens and VR experiences on 3D screens, as well as game developers looking to put the real world into their games. If you’ve ever needed to communicate a complex project to a non-technical stakeholder (or you’ve ever played Grand Theft Auto, a Spider-Man game, or Watch Dogs), you might already know how powerful interacting with the real world in 3D can be.

At the beginning of the year, Geopipe released a beta of the Geopipe API and SDK, initially created for Unity but applicable to other software or engines you might want to use. The SDK lets you stream Geopipe’s cities directly into software like Unity with no programming required. To save customers time and effort, it automatically loads highly detailed models close to the camera and low-detail models far away, so you can have fine detail nearby while still seeing iconic skylines miles away, all at a reasonable frame rate on a consumer computer.
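Loading high detail near the camera and low detail far away is classic distance-based level-of-detail (LOD) selection. A minimal sketch follows; the three LOD levels and their distance thresholds are hypothetical, and the SDK may well use different criteria (for example, projected screen-space error) rather than raw distance.

```python
import math
from bisect import bisect_right

# Hypothetical thresholds in metres: under 200 m -> LOD 0 (finest),
# 200 m up to 1,500 m -> LOD 1, 1,500 m and beyond -> LOD 2 (coarsest).
THRESHOLDS_M = [200.0, 1500.0]


def choose_lod(camera, tile_center, thresholds=THRESHOLDS_M):
    """Return an LOD index (0 = finest) from the camera-to-tile distance.
    bisect_right counts how many thresholds the distance has reached."""
    distance = math.dist(camera, tile_center)
    return bisect_right(thresholds, distance)


camera = (0.0, 0.0, 50.0)
tiles = {
    "near tile": (100.0, 50.0, 0.0),      # about 122 m away
    "mid tile": (900.0, 400.0, 0.0),      # about 986 m away
    "far tile": (5000.0, 2000.0, 0.0),    # about 5.4 km away
}
for name, center in tiles.items():
    print(name, choose_lod(camera, center))  # prints LOD 0, 1, and 2 respectively
```

Re-running this selection as the camera moves, and swapping tiles whose LOD changes, is what keeps nearby streets sharp while distant skylines stay cheap to render.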

Future Vision

When Mitchell talks about their vision for the future, his eyes light up. “In five to ten years, we aim to be the company providing perfect virtual reproductions of the real world, copies that let you do anything you could do in the world of today (and more), copies that let you imagine any possibility for the world of tomorrow.”

Dickerson and Mitchell’s big picture is ambitious: “We’re working on making it possible for computers to understand every detail of real environments automatically, to massively simplify life for AEC professionals building new buildings and master plans, OEMs training autonomous cars and drones, developers and artists creating ever more immersive games, and even for special effects artists, insurance appraisers, and armchair tourists.”

From what I’ve seen of the models and data so far, this could be a game-changer. It already has applications in BIM and in visualizing projects from data collected as part of the project, and with the geospatial element added in the near future, we could really be looking at the holy grail of automated city extraction.

Watch future issues of xyHt for case studies of this software being used in the AEC industries.